When the Past Won’t Scan: Queer Historiography and the Algorithmic Archive

How artificial intelligence challenges (and extends) the work of queer historians.

Joseph Golden

10/7/20255 min read

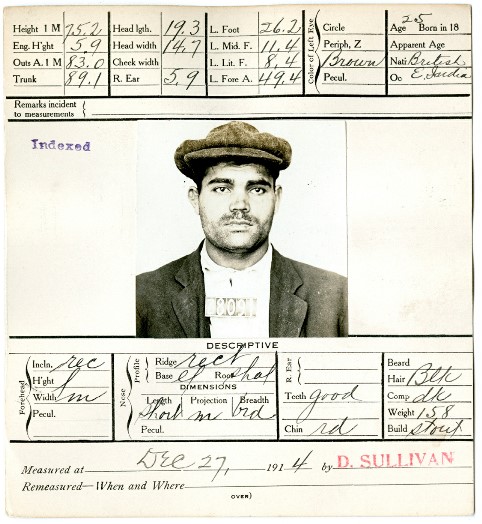

Mugshot of a man arrested in December 1914 for the “infamous crime against nature,” a charge once used to criminalize same-sex relationships. He was sentenced to six years in San Quentin State Prison. Today, this image, preserved by the ONE Archives at the USC Libraries, reminds us how early policing and record-keeping turned bias into data. As artificial intelligence systems increasingly draw on historical archives, it’s vital to recognize how these records reflect injustice as much as they reflect information, and to ensure that the technologies we build do not repeat those harms.

Photo courtesy of Matthew Riemer and Leighton Brown, We Are Everywhere: Protest, Power, and Pride in the History of Queer Liberation, 2019.

Introduction

For generations, queer people have lived in the margins of history. Their letters were burned, their photographs mislabeled, and their love stories buried under euphemism and shame. What survives of queer history often exists not in grand state archives but in the fragile corners of the record: in diaries, police ledgers, gossip columns, and memorial quilts.

Now, artificial intelligence (AI) promises to revolutionize how we access and interpret history. It can process millions of documents in seconds, reconstruct damaged texts, and find patterns invisible to the human eye. Yet the promise of AI also raises profound questions for queer historiography and social justice. Can algorithms, built on data shaped by exclusion, truly recover what history tried to erase? Or will they only reproduce those silences at scale?

Histories the Machine Cannot Read

Queer historians have long recognized that archives are inherently political. They are built by those in power, not those excluded from it. As Michel Foucault taught, “power and knowledge directly imply one another.”² For much of modern history, that governance meant erasure. Letters that revealed desire between men, love poems between women, and records of gender variance were deemed obscene or irrelevant, stripped from public collections, and lost to the record.

The problem, then, is not that data are missing; it’s that they were never collected in the first place. Licata and Petersen noted that historians of homosexuality were forced to rely on “ecclesiastical records, legal documents, [and] medical opinions,” materials produced by those who sought to define and control queer life.³ The task of queer historiography has always been to read against the grain: to locate meaning in silence, irony, and subtext.

Artificial intelligence, by contrast, depends on visibility. It is built to recognize, label, and categorize. It thrives on structured data, not on ambiguity. Guillaume Chevillon, in The Queer Algorithm, argues that algorithms “are not neutral tools but reflect the values, biases, and exclusions built into them.”⁴ To “queer” the algorithm, then, is not merely to add LGBTQ+ labels to datasets but to destabilize the very logic of classification, much as queer historians have long refused to impose modern identities on the past.

The Human Archive

AI can read text, but it cannot interpret longing. It can find patterns, but it cannot feel grief. The documents that make queer history—handwritten diaries, censored letters, coded advertisements—resist automation precisely because they rely on context and emotion. Handwriting-recognition tools stumble on nineteenth-century script and coded phrases like “my dearest friend.” Optical character recognition (OCR) misreads old newspaper columns, skipping over euphemisms such as “disorderly conduct” or “masquerade ball” that once signaled queer life to those in the know.

Even photographs elude machine vision. A nineteenth-century portrait of two women gazing at one another might be tagged as “friends” by a visual recognition model, because algorithms are trained on modern, heteronormative understandings of intimacy. Lauren Tilton and Taylor Arnold refer to this as the presentist bias—when AI “forces the past to resemble the present.”⁵

Historians, by contrast, are translators of silence. Jen Manion’s Female Husbands: A Trans History demonstrates how careful contextual reading can reveal gender variance that is often hidden in plain sight.⁶ The “female husbands” who appeared in court records or scandal sheets between the eighteenth and nineteenth centuries were not anomalies to be classified but people negotiating survival within the limits of language. No algorithm could intuit what Manion calls the “creative labor” of living between genders. Only a historian, attentive to the subtleties of context, can make meaning of such fragments.

1829 portrait of James Allen, entitled "The Female Husband!" London. https://digitalcollections.nypl.org/items/ff996550-c606-012f-8ac2-58d385a7bc34?canvasIndex=0

When Data Becomes Gatekeepers

The danger of AI lies not only in what it cannot read but in what it claims to know. When algorithms declare “no results found,” they risk reaffirming the same archival silences that queer historians have fought to expose. Census data, for example, record households and property but omit the intimacy between cohabiting same-sex partners. A machine learning model might analyze demographic patterns yet remain blind to the tenderness implied in two women “boarding together” or two men “sharing quarters.”

These erasures are not accidental: they are structural. AI systems, trained on vast datasets that privilege completeness and conformity, reproduce the hierarchies of visibility that have long marginalized queer, trans, and nonbinary lives. In this way, the algorithm becomes an extension of the bureaucratic archive: efficient, standardized, and exclusionary.

Projects like Queer in AI, a global collective of queer computer scientists, recognize this danger. Their work demonstrates that community-led, participatory design can make AI more inclusive by centering on lived experiences rather than abstract fairness metrics.⁷ Their model of decentralized organizing parallels the ethos of queer historiography itself: grassroots, relational, and resistant to hierarchy.

Queer Historians as Ethical Coders

If algorithms mirror the values of their makers, then queer historians are already practicing an alternative form of coding—one rooted in interpretation, empathy, and care. They read between the lines of damaged documents, reconstruct erased names, and preserve fragile artifacts that resist digitization. Their methods model what ethical AI could be: reflexive, self-aware, and committed to justice over efficiency.

Queer history reminds us that data are never neutral, that archives carry moral weight, and that knowledge is always entangled with power. AI, despite its considerable capacity, lacks these ethical instincts. It cannot understand why a quilt square sewn for a lover lost to AIDS matters, or why a torn photograph can contain an entire life.

To queer the algorithm, then, is not just to improve its accuracy; it is also to challenge its underlying assumptions. It is to reimagine what counts as knowledge, to build systems that embrace uncertainty, emotion, and contradiction. It is to insist, as queer historians always have, that what is missing is as meaningful as what survives.

The challenge for the digital age is not to teach machines to think like us, but to ensure we do not start thinking like machines.

Notes

Salvatore J. Licata and Robert P. Petersen, The Gay Past (New York: Taylor & Francis, 2014), Kindle loc. 177–182.

Michel Foucault, The History of Sexuality, vol. 1 (New York: Vintage, 1990), 15.

Licata and Petersen, The Gay Past, Kindle loc. 224–228.

Guillaume Chevillon, “The Queer Algorithm,” ESSEC Business School, 2024.

Lauren Tilton and Taylor Arnold, quoted in Moira Donovan, “How AI Is Helping Historians Better Understand Our Past,” MIT Technology Review, April 11, 2023.

Jen Manion, Female Husbands: A Trans History (Cambridge: Cambridge University Press, 2020).

Organizers of Queer in AI et al., “Queer in AI: A Case Study in Community-Led Participatory AI,” arXiv preprint arXiv:2303.16972v3 (June 8, 2023).

Bibliography

Chevillon, Guillaume. “The Queer Algorithm.” ESSEC Business School, 2024.

Donovan, Moira. “How AI Is Helping Historians Better Understand Our Past.” MIT Technology Review, April 11, 2023.

Foucault, Michel. The History of Sexuality, Vol. 1. New York: Vintage, 1990.

Licata, Salvatore J., and Robert P. Petersen. The Gay Past. New York: Taylor & Francis, 2014.

Manion, Jen. Female Husbands: A Trans History. Cambridge: Cambridge University Press, 2020.

Organizers of Queer in AI et al. “Queer in AI: A Case Study in Community-Led Participatory AI.” arXiv:2303.16972v3 (June 8, 2023).

Tilton, Lauren, and Taylor Arnold. Quoted in Moira Donovan, “How AI Is Helping Historians Better Understand Our Past.” MIT Technology Review, April 11, 2023.